Free June 13 Webinar on Protecting B2B Data in Automotive Environments

ATLANTA, June 11, 2012 -- /PRNewswire/ -- The need to protect automotive business data by modernizing B2B infrastructures to meet today's complex global collaboration demands will be the topic of a free one-hour webinar at 1 pm EDT on Wednesday, June 13. The discussion, "Secure and Protect Your Business Assets," will be led by representatives of the Automotive Industry Action Group (AIAG) and global business integration software provider SEEBURGER.

Presenters will discuss data security risks arising from technology challenges in emerging markets such as Brazil, Russia, India and China (BRIC), as well as from infrastructure challenges, including outdated technologies such as FTP, a lack of standards-based security, and OEM-to-OEM product lifecycle management (PLM) data exchange.

Also covered will be strategies for mitigating those risks, including:

- Newer B2B protocols such as OFTP2

- Managed file transfer (MFT) technology enabling the exchange of large and/or sensitive files with full security, visibility, governance and regulatory compliance protections

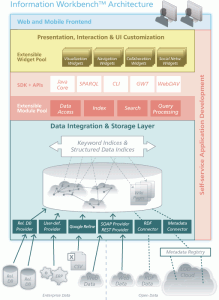

- Consolidated communications infrastructures such as the SEEBURGER Business Integration Suite, the first unified platform for securely managing all business, supply chain and trading partner file transfer activity with a common graphical user interface and single audit trail

Webinar presenters will be Akram Yunas, Program Manager for AIAG and former executive director of the Automation Society, and Brian Jolley, Information Technology Specialist for SEEBURGER, who has specialized in automotive information technology business solutions for the past 14 years.

About SEEBURGER

SEEBURGER is a global provider of business integration and secure managed file transfer (MFT) solutions that streamline business processes, reduce operational costs, facilitate governance and compliance, and provide visibility to the farthest edges of the supply chain to maximize ERP effectiveness and drive new efficiencies. All solutions are delivered on a unified, 100% SEEBURGER-engineered platform that lowers the total cost of ownership and reduces implementation time. With more than 25 years in the industry, SEEBURGER today is ranked among the top business integration providers by industry analysts, serves thousands of customers in more than 50 countries and 15 industries, and has offices in Europe, Asia Pacific and North America. For more information, visit http://www.seeburger.com/ or blog.seeburger.com/